Electric Vehicles Are Embracing New Trends in 2024 - January 26, 2024

The automotive world is rapidly evolving as today’s cars gain access to technology that was impossible just a decade ago. In China, two automakers are rolling out electric passenger vehicles powered by sodium-ion batteries. The move comes in response to fluctuations in lithium prices that put pressure on the EV market.

Meanwhile, AI remains the biggest trend in technology. Volkswagen is partnering with Cerence, an AI-focused automotive software company, to bring ChatGPT features into its vehicle lineup. Drivers will be able to interact with the AI assistant for both simple and complex queries while on the road.

Two Chinese Automakers Launch EVs With Sodium-Ion Batteries

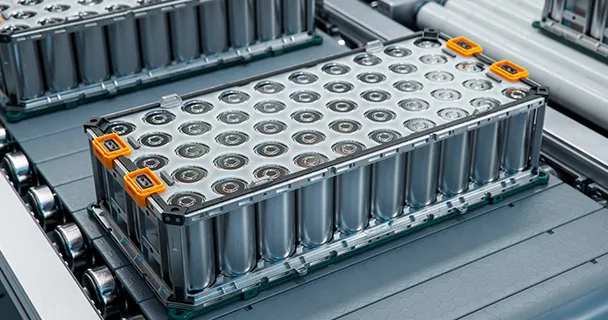

With the popularity of electric vehicles (EVs) reaching new heights, the batteries that power them are coming under the microscope. Though it may seem trivial to outsiders, battery chemistry is a significant factor in determining both a vehicle’s driving range and its total cost. In China, the world’s largest EV market, sodium-ion batteries are making their debut and challenging the dominance of lithium-ion batteries.

Recent reports highlight two new EVs powered by sodium-ion batteries arriving on the market. The first comes from JMEV, an EV offshoot of the Chinese Jiangling Motors Group. It boasts a sodium-ion battery manufactured by China’s Farasis Energy with an energy density between 140Wh/kg and 160Wh/kg. For comparison, most lithium-ion batteries feature an energy density between 260Wh/kg and 270Wh/kg.

Notably, the JMEV vehicle has a driving range of 251 kilometers (about 156 miles). That makes it an acceptable choice for daily commuting or cross-city travel, though it won’t be winning any awards for range. Compared to leading lithium-ion EVs, which boast ranges of over 300 miles per charge, this sodium-ion EV is underwhelming.

However, it’s an exciting new addition to the market given the potential for growth and savings in the sodium-ion battery segment. Compared to their lithium-based cousins, sodium-ion batteries offer superior discharge capacity retention, particularly in cold weather. The JMEV model’s battery posts a respectable retention rate of 91%.

Meanwhile, the second Chinese EV to feature a sodium-ion battery comes from Yiwei, a brand belonging to the JAC Group. Its battery is made by HiNa Battery and gives the vehicle a range of 252 kilometers.

Sodium-ion battery technology is expected to improve significantly over the next few years. Farasis claims the energy density of its batteries will increase to between 180Wh/kg and 200Wh/kg by 2026. It claims this will make the battery more relevant for a broader range of applications, including energy storage and battery swapping.
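To put the energy-density figures above in proportion, the short Python sketch below compares the midpoints of the quoted ranges. It assumes the sodium-ion and lithium-ion figures are measured on the same basis (the text does not say whether they are cell-level or pack-level), and using midpoints is a simplification of our own, not something Farasis has published.

```python
# Energy-density ranges quoted in the text, in Wh/kg.
# Assumption: all figures are measured on a comparable basis.
SODIUM_ION_NOW = (140, 160)       # Farasis battery in the JMEV EV
SODIUM_ION_2026 = (180, 200)      # Farasis target for 2026
LITHIUM_ION_TYPICAL = (260, 270)  # comparison range given in the text


def midpoint(bounds: tuple[float, float]) -> float:
    """Return the midpoint of a (low, high) range."""
    low, high = bounds
    return (low + high) / 2


def relative_density(chem: tuple[float, float], reference: tuple[float, float]) -> float:
    """Ratio of midpoint energy densities: chem / reference."""
    return midpoint(chem) / midpoint(reference)


if __name__ == "__main__":
    now = relative_density(SODIUM_ION_NOW, LITHIUM_ION_TYPICAL)
    later = relative_density(SODIUM_ION_2026, LITHIUM_ION_TYPICAL)
    print(f"Sodium-ion today vs lithium-ion: {now:.0%}")
    print(f"Sodium-ion 2026 target vs lithium-ion: {later:.0%}")
```

With these inputs, today’s sodium-ion battery lands at roughly 57% of the lithium-ion midpoint and the 2026 target at roughly 72%, so the gap would narrow, but not close, if the target is met.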

Another factor to consider is the volatile price of lithium and its ripple effect on the EV market. In 2022, sharp price hikes spurred manufacturers to devote more resources to sodium-ion battery research and production. Unsurprisingly, Farasis and HiNa aren’t the only firms pushing into the market. China’s CATL and Eve Energy have also launched sodium-ion technology in response to rising lithium prices.

Given that China imports roughly 70% of its lithium, price swings are a significant factor for the industry to consider. Since experts expect sodium-ion battery prices to drop once the batteries enter mass production, sodium-ion could become a viable alternative for the industry.

However, experts warn that slumping lithium prices will quell interest in sodium-ion battery research and adoption. How this trend plays out in 2024 will largely depend on lithium prices—not the booming demand for EVs in China and beyond.

Volkswagen Partners with Cerence to Bring ChatGPT Features to Its Vehicles

Just when it seemed like ChatGPT was everywhere, the artificial intelligence (AI) chatbot is expanding its presence into cars. Thanks to a partnership between Volkswagen and Cerence, an AI-focused automotive software company, ChatGPT will soon be integrated into the carmaker’s Ida voice assistant. The news, announced during CES 2024, has met a mixed reception from drivers.

For those wary of integrating AI with their vehicle’s native software, there is a bright side. The car’s Ida assistant will still handle tasks like voice-powered navigation and climate control changes. However, Cerence’s Chat Pro software will soon be able to handle more complex queries through the cloud.

Drivers can pose natural language, open-ended queries like “Find me a good burger restaurant nearby.” Cerence’s software then processes the request and sends an answer back through the Ida assistant or the built-in navigation system.

As one might expect from ChatGPT, the integration can also handle more nuanced queries. A recent Volkswagen ad showed Ewan McGregor using “The Force” (the Cerence ChatGPT integration) to inquire about wearing a kilt to an upcoming event. The software supplied a detailed response advising the actor on the appropriateness of wearing kilts to formal gatherings. The ChatGPT integration will also be able to offer interactions like providing trivia questions for long road trips or reading a bedtime story for a child in the back seat.

According to Cerence, the ChatGPT integration gives drivers access to “fun and conversational chitchat” at the push of a button or voice command prompt. Looking ahead, Volkswagen and Cerence plan to continue their collaboration to add more ChatGPT features to the Ida voice assistant.

Drivers can expect ChatGPT functionality to arrive in the second quarter of 2024. The update will roll out over the air, and Volkswagen claims it will be “seamless.” An extensive lineup of Volkswagen vehicles, including both electric and gas-powered models, will support the integration. The ID.3, ID.4, ID.5, ID.7, Tiguan, Passat, and Golf will be the first to receive the update.

Of course, while this is an exciting update for Volkswagen fans, there is the question of whether cars need access to ChatGPT’s features. The last thing the roads need is more distracted drivers. While drivers can interact with ChatGPT hands-free, it’s easy to envision someone getting a bit too involved with answering trivia questions behind the wheel and losing focus on the road. At this time, it’s unclear whether Volkswagen and Cerence have a plan in place to address these safety concerns.

Despite this, similar features will likely arrive in vehicles of other makes in the days ahead. ChatGPT is currently one of the hottest topics of conversation in the tech world, and automakers are likely scrambling to catch up with Volkswagen and add it to their vehicles.

AI Preparing for Another Busy Year - January 9, 2024

The buzzing excitement of a new year always brings plenty of change and interesting perspectives for the chip sector. From new product releases to outlooks on the latest trends, manufacturers and buyers are preparing for a busy year. AI promises to be a key technology in 2024, and Intel’s latest Xeon processor, which boasts AI acceleration in every core, is a testament to it.

Meanwhile, memory prices are continuing their surge from the end of 2023 thanks to sustained production cuts from the world’s biggest manufacturers. As buyers scramble to secure inventory amid fresh demand from China’s smartphone market, the pricing outlook favors memory chip suppliers.

Intel’s Latest Xeon Processors Feature AI Acceleration in All 64 Cores

Computing headlines in 2023 were dominated by artificial intelligence (AI). Experts believe the same will be true in 2024 as tech leaders around the globe further expand the integration of AI with traditional hardware and software. Intel’s forthcoming 5th Gen Xeon processors are no exception. The new chips are built with AI acceleration in each of their 64 cores to improve both efficiency and performance. The chipmaker’s latest breakthrough doesn’t stand alone. Adding AI to traditional computing hardware is one of the hottest trends in chipmaking. It promises to play a central role in the industry over the coming years as more ways for end users to harness the power of AI on their devices are introduced.

However, experts have also warned that increased usage of AI puts a hefty strain on the world’s power supply. Many fear the technology could consume more power than entire countries as its popularity skyrockets. One way to offset this power-hungry tech is through more efficient chips—a feature Intel highlighted during the 5th Gen Xeon unveiling.

Intel says the new chips will enable 36% higher average performance per watt across workloads. This is a significant efficiency gain that should appeal to buyers who closely monitor their total cost of ownership. Over the long run, systems using Intel’s newest Xeon chips should use noticeably less energy for the same workloads than those running on older processors.
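A gain in performance per watt translates into energy savings less directly than the percentage suggests. Assuming the same workload and that Intel’s 36% average applies uniformly (both simplifications), energy per task scales as the reciprocal of performance per watt, as this Python sketch shows:

```python
def energy_per_task_ratio(perf_per_watt_gain: float) -> float:
    """Energy for a fixed amount of work, new vs. old.

    perf_per_watt_gain is fractional: 0.36 means 36% higher perf/W.
    Energy per task = power / throughput = 1 / (perf per watt),
    so the new/old ratio is 1 / (1 + gain).
    """
    return 1 / (1 + perf_per_watt_gain)


if __name__ == "__main__":
    ratio = energy_per_task_ratio(0.36)  # Intel's quoted average gain
    print(f"Energy for the same work: {ratio:.1%} of before")
    print(f"Energy saved: {1 - ratio:.1%}")
```

For a 36% gain, that works out to about 73.5% of the previous energy for the same work, or roughly 26% saved.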

Alongside the efficiency gains, the new Xeon processors deliver a notable average performance increase of 21% compared to the previous generation. For AI work, such as large language model (LLM) training, the chips boast a 23% generational improvement, according to Sandra Rivera, Intel executive vice president and head of the Data Center and AI Group. For LLMs under 20 billion parameters, the new Xeon chip delivers latency below 100 milliseconds, enabling more responsive generative AI inference.

Per Intel’s press release announcing the new chip, including AI acceleration in every core will “address demanding end-to-end AI workloads before customers need to add discrete accelerators.”

Indeed, upgradeability is another key selling point for the 5th Gen Xeon processors. The chips are pin- and software-compatible with the previous generation.

Rivera says, “As a result of our long-standing work with customers, partners and the developer ecosystem, we’re launching 5th Gen Xeon on a proven foundation that will enable rapid adoption and scale at lower TCO.”

The first equipment featuring Intel’s new Xeon chips will arrive in the first quarter of 2024. Buyers can expect offerings from leading OEMs, including Lenovo, Cisco, Dell, HPE, IEIT Systems, Super Micro Computer, and more.

As AI adoption continues, expect to see more acceleration built into next-gen silicon. While it’s unclear how far the AI trend will advance, the technology isn’t going away anytime soon. If anything, AI looks set to play a pivotal role in the future of computing and humanity. Don’t be fooled by the feeling that AI is already everywhere. According to Intel CEO Pat Gelsinger, the technology is likely “underestimated.”

With that perspective leading the way, expect Intel to focus more intensely on AI chips in the years ahead. The 5th Gen Xeon processor is a meaningful step in this direction, and more will surely follow.

NAND Flash Wafers See 25% Surge Amid Sustained Production Cuts

Aggressive production cuts are pushing up memory chip prices practically across the board, according to new data from TrendForce and insights from industry experts. While the likes of Samsung, SK Hynix, and Micron have repeatedly slashed production, particularly of NAND Flash modules, buyers have burned through their inventories and are now rushing to secure more.

As the year begins, peak season demand from the holiday production cycle is waning, but overall demand for memory chips hasn’t slowed down. Experts point to persistent production cuts as the primary driver of a supply and demand imbalance. As a result, memory chip makers have been able to raise prices sharply.

In November alone, NAND Flash wafer prices skyrocketed by 25%, according to data from TrendForce. This rise closely followed Samsung’s decision to slash its total production capacity by 50% in September. While the rise is painful for buyers looking to source new memory components, experts have grown more confident that chip prices will continue to firm.

Interestingly, production cuts aren’t the only factor behind the price increase. Experts point to the surging Chinese smartphone market as another noteworthy factor. Led by Huawei and its Mate 60 series, smartphone manufacturers in China are working to regain market share lost because of chip export restrictions imposed by the U.S. and its allies. As the Chinese chip sector seeks to reestablish its footing, the country’s device makers are aggressively raising their production goals and aim to expand output further in the new year.

Both in China and elsewhere, memory chip buyers have no choice but to accept higher wafer prices as they race to meet demand from consumers. Moreover, industry sources cited by TrendForce report that inventories across the board are shrinking rapidly, forcing customers to bite the bullet and place even more orders at higher prices. One source is quoted as saying, “Everyone just keeps scrambling for inventory.”

However, the longevity of the rising memory prices may be limited. Industry rumors suggest memory manufacturers may be preparing to increase production again in response to downstream demand. While this hasn’t been confirmed by any of the largest memory players, a production uptick does make sense for the first half of 2024.

No one wants a repeat of the sweeping shortages and subsequent round of panic buying seen in the past few years. A stable supply chain is far more beneficial in the long run for all involved. Yet, memory makers also can’t afford to continue selling their chips at incredibly low prices. This is a tricky balance to pull off and it will be interesting to see how the industry handles it in the coming months.

Notably, if production increases again, prices will likely slow their rise and stabilize around their current level. In the days ahead, both buyers and manufacturers must carefully monitor memory prices and inventory levels to prevent disruption and ensure profitability.


New Developments Coming in 2024 - December 15, 2023

Partnerships are the name of the game in today’s intertwined and convoluted chip industry. From securing supply chains to advancing manufacturing technology, more chipmakers are teaming up today than ever before.

Meta and MediaTek have recently announced a collaboration to develop next-gen chips for AR/VR smart glasses. Meanwhile, Intel has chosen TSMC to produce its Lunar Lake PC chips in a surprising move for its forthcoming mainstream platform.

MediaTek, Meta to Collaborate on AR/VR Chips for Next-Gen Smart Glasses

Although artificial intelligence (AI) has dominated headlines for the past few years, many other technologies are also experiencing exciting growth and development. Virtual reality (VR) and augmented reality (AR) have come a long way, primarily thanks to chip advancements that allow them to operate untethered from external computers.

Meta has rapidly cemented itself as a leader in this space and is expected to hold 70% of the overall market share in 2023 and 2024. Both its current Quest VR headsets and more experimental augmented reality glasses are far more refined than models from years past. Thanks to the accelerating adoption of AI, these devices are expected to get much smarter.

Now, Meta has announced a collaboration with MediaTek to develop AR and VR semiconductors for its next-gen smart glasses. Notably, Meta has until now relied on Qualcomm chips to power the last two generations of its smart glasses.

According to TrendForce, the move is likely an effort by Meta to decrease costs and secure its supply chain. Meanwhile, the partnership will help MediaTek challenge Qualcomm in the AR/VR space and expand its footprint.

This fall, Meta introduced the second generation of its smart glasses, which, like the first, was developed in collaboration with Ray-Ban. The new glasses feature improved recording and streaming capabilities to further cater to their primary audience of social media users. They also take advantage of generative AI advancements by integrating Meta’s AI voice assistant, powered by the Llama 2 model.

MediaTek’s expertise in building efficient, high-performance SoCs will be essential to this ambition. AR glasses have traditionally been clunky, which has hindered their adoption. A sleeker design that doesn’t sacrifice advanced capabilities could change this.

Meta has been working diligently to develop chips in-house, including through a collaboration with Samsung. Its partnership with MediaTek can be seen as a short-term risk management strategy as it continues to move toward chip independence. Because smart glasses currently carry price tags of up to $300, which may deter consumers, the move may also be a way to cut production costs thanks to MediaTek’s competitive pricing.

Interestingly, despite the buzz around its AR and VR products, Meta has shipped just 300,000 pairs of its original model. With an anticipated launch of its next-gen smart glasses in 2025, it remains unclear how the market will receive them. Moreover, there are no concrete indications that the AR device market has gained significant traction.

Despite this, MediaTek’s reasons for collaborating with Meta extend beyond Meta’s own line of devices. By strategically integrating itself into Meta’s chip supply chain, MediaTek can cut into Qualcomm’s dominant market share. In that sense, the partnership is best viewed as a strategic bet on the future rather than a quick way to add revenue now. Should Meta further increase its already large market share, or should general adoption of AR and VR devices grow, MediaTek could benefit greatly.

The Taiwanese firm has made a big push into the VR and AR market in recent years and its latest move is a signal that it plans to continue on this path. In 2022, a MediaTek VR chip was used in Sony’s PS VR2 headset. Now, thanks to this partnership with Meta, MediaTek chips could become a foundational part of the AR and VR device market over the coming years.

Intel Chooses TSMC’s 3nm Process for Lunar Lake Chip Production

Intel’s upcoming Lunar Lake platform, designed for next-gen laptops releasing in the latter half of next year, is making waves in the PC industry thanks to its innovative design and solid specs. After years of keeping production of its mainstream PC chips in-house, Intel is looking outward for its latest platform. The U.S. chipmaker is reportedly partnering with TSMC for all primary Lunar Lake chip production using the Taiwanese firm’s 3nm process.

Notably, neither Intel nor TSMC has directly commented on the partnership since leaks of Lunar Lake’s internal design details began spreading last month. However, discussions among industry experts on social media and recent reports from TrendForce lend credibility to the rumors.

Lunar Lake features a system-on-chip (SoC) design composed of a CPU, GPU, and NPU. Intel’s Foveros advanced packaging technology is then used to join the SoC with a high-speed I/O chip, and LPDDR5X DRAM is integrated on the same substrate. TSMC will reportedly produce the CPU, GPU, and NPU with its 3nm process, while the I/O chips will be made with its 5nm process.

Mass production of Lunar Lake silicon is expected to start in the first half of 2024. This timeline matches the resurgence in the PC market most industry analysts project for the back half of next year. After slumping device sales over the past several consecutive quarters, a turnaround is expected thanks to demand for new AI features and a wave of consumers who purchased devices during the pandemic being ready to upgrade.

This latest partnership is far from the first time Intel and TSMC have worked together, but the fact that it involves Intel’s mainstream PC chip line is noteworthy. TSMC produced chips for Intel’s Atom platform more than ten years ago. However, Intel has only recently started outsourcing chips for its flagship platforms, including the GPU and high-speed I/O chips used in its Meteor Lake platform.

According to TrendForce, the decision to move away from in-house production and trust TSMC with the job hints at future collaborations. What this could look like remains to be seen, but TSMC may soon find itself producing Intel’s mainstream laptop platforms more frequently.

Proactive Sourcing Strategies for All Components - December 1, 2023

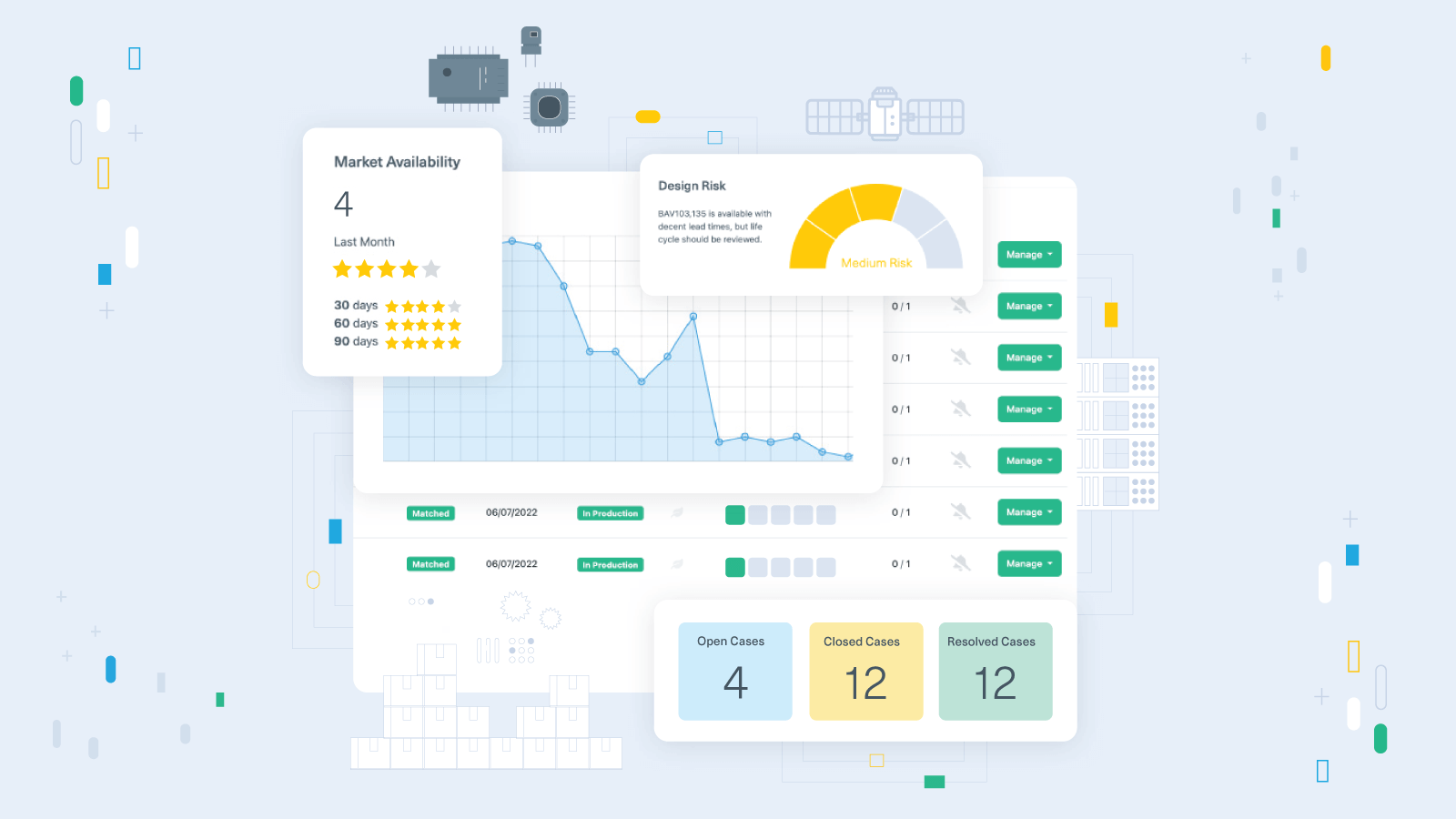

The chip industry is positioned for a stretch of significant growth over the coming decade. Technologies like artificial intelligence promise to revolutionize the supply chain and daily life. However, this exciting shake-up means OEMs must carefully strategize the best way to source components—both advanced and legacy node chips—to prevent costly production delays.

Meanwhile, companies are racing to improve their AI capabilities. Training new models is a key priority and is being benchmarked with a new test designed for measuring the efficiency of training generative AI. Unsurprisingly, Nvidia leads the way, but both Intel and Google have made big improvements.

OEMs Must Prepare to Navigate Supply Chain Shifts Across Node Sizes as Chip Industry Evolves

For original equipment manufacturers (OEMs), the semiconductor supply chain is simultaneously one of the most difficult sectors to source inventory from yet also more accessible than ever. With supply and demand imbalances as well as inventory shortages and gluts raging over the past few years, the industry has been anything but stable. As chipmakers work to expand the production of advanced node chips, legacy nodes are falling out of favor even as demand for them remains steady.

This means OEMs must be mindful of sourcing components across node sizes and plan their strategies according to unique trends affecting each one. Over the next decade, technologies like artificial intelligence (AI), 5G, and electric vehicles promise to redefine the chip world. However, the aerospace, defense, and healthcare sectors continue to rely on legacy chips for their essential operations.

That continued reliance on legacy chips is likely to cause problems, according to many industry analysts. As semiconductor manufacturers shift their production strategies toward more advanced nodes, investment in legacy node fabs has decreased considerably. Certain firms, like GlobalFoundries, have capitalized on this by focusing their efforts on older chips. But the industry is largely moving on.

This means demand for legacy components is poised to outpace supply over the next few years. At a time when many legacy chips are also more difficult to find—often only available through refabrication—OEMs who rely on them are facing an incredibly challenging period for component sourcing.

Meanwhile, the majority of chipmakers are being lured by the high profit margins and strong demand for cutting-edge chips designed to power AI and EV applications. They continue to invest billions of dollars each year to expand production capacity at existing facilities or build new fabs. New fabs take time, though; it is often years before one is up and running. OEMs will need to be patient while waiting for shortages to resolve.

Moreover, OEMs must act now to adopt forward-looking strategies for sourcing essential components across node sizes. A diverse plan utilizing a more robust network of suppliers is an effective tactic for insulating against the ebbs and flows of the supply chain. This includes working with both local and global suppliers. Many carmakers, particularly those in the EV space, have adopted this tactic by forging relationships directly with chip manufacturers to guarantee inventory.

Some experts also recommend OEMs with the capacity to do so purchase extra inventory ahead of potential shortages. While building up a safety stock is expensive, doing so can prevent costly production delays down the line. Of course, on a larger scale, this trend can also further disrupt the supply chain as inventory gluts push down prices—as is currently being seen in the memory chip market.

Regardless of the approach OEMs take, the key is to remain agile. Being ready to adapt to changes in the market at a moment’s notice and predict them before they happen is essential in today’s fast-paced market. Utilizing advanced analytics tools and data from every point on the supply chain creates a bigger picture and allows for more accurate decision making.

Over the next several years, the chip industry is poised to go through another period of rapid growth and change thanks to AI and electric cars. As demand for different nodes shifts with it, OEMs must be prepared to evaluate and adjust their sourcing strategies at multiple levels or risk falling behind. Meanwhile, OEMs relying on legacy components must be prepared to face shortages and adopt creative solutions to secure enough inventory.

Nvidia Continues to Dominate Generative AI Training, But Intel and Google are Closing In

Training generative artificial intelligence (AI) systems is no easy task. Even the world's most advanced supercomputers take days to complete the process—a timeline few other projects can claim. Less advanced systems require months to do the same work, putting into perspective the vast amount of computing power needed to train large language models (LLMs) like GPT-3.

To benchmark progress in AI training efficiency over time, MLCommons has designed the MLPerf series of tests. Since the benchmarks launched five years ago, AI training performance on them has improved by a factor of 49. MLPerf added a new GPT-3 test earlier this year, and 19 companies and organizations submitted results. Nvidia, Intel, and Google were the most noteworthy among them.
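A 49-fold improvement over five years is easier to grasp as an annual rate. The Python sketch below assumes steady compounding over exactly five years, which real benchmark rounds do not follow; it is only a back-of-the-envelope conversion.

```python
import math


def annual_growth_factor(total_factor: float, years: float) -> float:
    """Constant yearly multiplier that compounds to total_factor over years."""
    return total_factor ** (1 / years)


def doubling_time_years(annual_factor: float) -> float:
    """Years needed to double at a constant annual_factor (> 1)."""
    return math.log(2) / math.log(annual_factor)


if __name__ == "__main__":
    g = annual_growth_factor(49, 5)  # 49x over ~5 years, as quoted
    print(f"Average speedup per year: {g:.2f}x")
    print(f"Implied doubling time: {doubling_time_years(g) * 12:.1f} months")
```

Under that assumption, training performance grew about 2.2x per year, doubling roughly every 11 months.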

Each of the three took the challenge seriously and devoted massive systems to running the test. Nvidia’s Eos supercomputer was the largest and, as expected, blew competitors away. Eos consists of 10,752 H100 GPUs, the leading AI accelerator on the market today by practically every metric. On MLPerf’s GPT-3 training benchmark, it completed the job in just four minutes.

Eos features three times as many H100 GPUs as Nvidia’s previous system, which allowed it to achieve a 2.8-fold performance improvement. The company’s director of AI benchmarking and cloud computing, Dave Salvatore, said, “Some of these speeds and feeds are mind-blowing. This is an incredibly capable machine.”
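Tripling the GPU count while gaining 2.8x in performance implies near-linear scaling. The Python sketch below computes that ratio; it assumes the “three times” figure is exact and attributes the whole speedup to the added hardware, ignoring any software improvements between submissions, so treat the result as approximate.

```python
def scaling_efficiency(speedup: float, resource_ratio: float) -> float:
    """Fraction of ideal linear scaling achieved.

    Ideal linear scaling means speedup == resource_ratio (efficiency 1.0).
    """
    return speedup / resource_ratio


if __name__ == "__main__":
    eff = scaling_efficiency(speedup=2.8, resource_ratio=3.0)
    print(f"Scaling efficiency: {eff:.0%}")
```

That works out to roughly 93% of ideal linear scaling.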

To be clear, the MLPerf GPT-3 test does not require a full training run; systems only need to train the model up to a key checkpoint. Reaching that checkpoint indicates the rest of the training could be completed with satisfactory accuracy, without actually running it. This makes the test more accessible for smaller companies that don’t have access to a 10,000+ GPU supercomputer, while also saving both computing resources and time.

Given this design, Nvidia Eos’ time of four minutes means it would take the system about eight days to fully train the model. A smaller system built with 512 Nvidia H100 GPUs, a much more realistic amount for most supercomputers, would take about four months to do the same task.
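The checkpoint design implies a large multiplier between benchmark time and a full training run, and the smaller-system estimate can be sanity-checked with naive linear scaling. The Python sketch below assumes training time is inversely proportional to GPU count, which real clusters only approximate.

```python
MINUTES_PER_DAY = 24 * 60


def full_run_multiplier(full_run_days: float, checkpoint_minutes: float) -> float:
    """How many times longer the full training run is than the benchmark run."""
    return full_run_days * MINUTES_PER_DAY / checkpoint_minutes


def linear_scaled_days(days: float, gpus_from: int, gpus_to: int) -> float:
    """Naive estimate assuming time scales inversely with GPU count.

    Real clusters do not scale perfectly, so this is only a rough estimate.
    """
    return days * gpus_from / gpus_to


if __name__ == "__main__":
    print(f"Full run / benchmark: {full_run_multiplier(8, 4):.0f}x")
    days = linear_scaled_days(8, gpus_from=10_752, gpus_to=512)
    print(f"512-GPU estimate (linear): {days:.0f} days, ~{days / 30.4:.1f} months")
```

Naive scaling gives about 168 days (roughly 5.5 months) for 512 GPUs, longer than the quoted four months. The text does not explain how that figure was derived; one possible reason is that smaller clusters tend to lose less efficiency to communication overhead, but that is an assumption.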

While it should come as no surprise that Nvidia is dominating the AI space given its prowess in the AI chip sector, Intel and Google are making strides to close the gap. Both firms have made big improvements in their generative AI training capabilities in recent years and posted respectable results in the MLPerf test.

Intel’s Gaudi 2 accelerator used its 8-bit floating-point (FP8) capabilities for the test, unlocking improved efficiency over its previous results. Lower-precision number formats have driven massive improvements in AI training speed over the past decade, and Intel is no exception: enabling FP8 delivered a 103% improvement in time-to-train. While this is still significantly slower than Nvidia’s system, it’s about three times faster than Google’s TPU v5e.
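A reduction in time-to-train cannot exceed 100%, so a figure above 100% only makes sense as a speed improvement. Reading it that way (an interpretation, since the underlying MLPerf numbers are not given here), the Python sketch below converts a percentage speedup into a time ratio:

```python
def time_ratio_from_speedup(percent_improvement: float) -> float:
    """New time-to-train as a fraction of the old one.

    A P% speed improvement means throughput is (1 + P/100) times higher,
    so the same job takes 1 / (1 + P/100) of the original time.
    """
    return 1 / (1 + percent_improvement / 100)


if __name__ == "__main__":
    r = time_ratio_from_speedup(103)
    print(f"Time-to-train: {r:.0%} of before ({1 - r:.0%} shorter)")
```

At 103%, the new time-to-train is about 49% of the old one, meaning training took roughly half as long.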

However, Intel argues that the Gaudi 2 accelerator’s lower price point keeps it competitive with the H100. From a price-to-performance standpoint, this is valid. The next-generation Gaudi 3 chip is expected to enter volume production in 2024. Given that it uses the same manufacturing process as Nvidia’s H100, hopes are high for its performance.

Over the coming years, the importance of generative AI training will only grow. Advancements like those being made by Nvidia, Intel, and Google are expected to pave the way for a new age of computing. Thanks to benchmarks like MLPerf’s training tests, we can track the results as each new round of submissions is published.

AI Helping Pull Memory and Mobile Out of the Depths - November 17, 2023

Experts have been quick to tout the revolutionary potential of artificial intelligence (AI). So far, the technology is living up to the hype. Industries across the spectrum are prioritizing AI in their forward-looking plans and chip suppliers are racing to keep up with demand being sparked by the rise of new AI products.

For the oft-beleaguered memory chip industry, this growth is a welcome change—and is driving price increases in the fourth quarter. Meanwhile, smartphone makers are working to implement generative AI into their latest devices as industry leaders claim the tech could be as influential as smartphones themselves.

DRAM, NAND Prices to Rise in Q4 with Continued Growth in Q1 Next Year

The memory chip market has gone through plenty of turmoil over the last few years. For the past several months, experts have been pointing to signs of recovery even as the industry seemed to bottom out. Now, thanks to numerous market influences, the numbers are starting to echo this sentiment as the memory sector heads for a turnaround in the new year.

According to a recent TrendForce report, Q4 memory chip prices are expected to rise by double digits. Mobile DRAM, interestingly, is leading the way with a projected increase of 13-18%. Meanwhile, NAND Flash isn’t far behind with prices for eMMC and UFS components expected to jump by 10-15% in the quarter.

Typically, mobile DRAM takes a backseat to traditional DRAM chips, but several factors are buoying its price in the fourth quarter. One of the largest is Micron’s decision to hike prices by more than 20% for many of its leading memory products. Samsung’s recent move to slash production in response to an industry-wide supply glut is also driving memory chip prices upward.

Meanwhile, the fall/winter peak season for new mobile devices tends to foster a favorable end of the year for memory chip makers. While this is a factor in the Q4 price rise, it isn’t the only one. Growth in the Chinese smartphone market, sparked by Huawei’s new Mate 60 series, is causing device makers to increase their production targets as consumer demand rises. Notably, the Mate 60 Pro features a 7nm 5G chip, making it the first such Chinese-made device to hit the market since trade restrictions aimed at cutting the country off from advanced chips and chipmaking equipment were implemented.

The wider chip industry is also prepared for a positive fourth quarter on the back of increasing electronics sales and IC sales. Notably, this is expected to drive year-over-year growth for key chip markets in Q4 following a stretch of declines over the previous five quarters.

Looking ahead, experts don’t expect the memory chip market’s fourth-quarter resurgence to fizzle out in the new year. The first quarter of 2024 promises to be another period of strong growth. However, TrendForce reports that expectations should be tempered, since external factors like the Lunar New Year and off-season production lulls will likely slow price increases.

Even so, experts believe demand will continue into the new year and that suppliers will maintain their conservative production strategies. Both will influence prices to stay high into Q1 2024 as the memory market continues its impressive recovery.

Xiaomi First to Smartphone Generative AI Features with Qualcomm’s Latest Snapdragon Chip

There was a time when foldable displays and 5G were expected to be turning points for smartphones. In a sense they were, but those technologies are already on the edge of becoming outdated thanks to the arrival of artificial intelligence (AI).

Qualcomm’s Snapdragon 8 Gen 3 mobile chipset launched in late October boasting on-device generative AI, which dramatically speeds up processing-intensive activities usually done in the cloud. Xiaomi simultaneously announced new flagship phones built on Qualcomm’s new edge AI platform.

Generative AI has boomed this year thanks in large part to the rise of ChatGPT. Its uncanny ability to create shockingly passable content in response to simple user prompts sets it apart from other forms of AI that require an expert-level understanding to use productively. Tech luminaries like Bill Gates and Sundar Pichai have said they think generative AI on smartphones could be as big as the dawn of the internet and smartphone technology itself.

Xiaomi was the only smartphone manufacturer to speak at the 2023 Qualcomm Snapdragon Technology Summit, where partner and president Lu Weibing showcased the Xiaomi 14 series.

"Xiaomi and Qualcomm have a long-term partnership, and Xiaomi 14 demonstrates our deep collaboration with Qualcomm. This is one of the first times a new platform and device launch together," Lu said.

The Xiaomi 14 and 14 Pro, currently exclusive to China pending a global launch, offer AI capabilities ranging from fairly basic features, like summarizing webpages and generating videoconference transcripts, to ambitious ones, such as inserting the user into scenes of locations and events around the world.

Qualcomm claims in its press release that Snapdragon 8 Gen 3 ushers in a new era of generative AI, “making the impossible possible.” The platform can run large language models (LLMs) at up to 20 tokens per second, one of the fastest rates in the smartphone industry, and generate images in a fraction of a second.

"AI is the future of the smartphone experience," said Alex Katouzian, senior vice president and general manager of Qualcomm's mobile, computing, and XR division.

Xiaomi has been working on AI since 2016 and maintains a dedicated AI team of over 3,000 people. With that team behind it and more than 110 million active users worldwide, Xiaomi’s digital assistant Xiao AI, which was upgraded with generative AI capabilities in August 2023, has the potential to keep evolving rapidly.

The digital assistant recognizes songs and objects, prevents harassing calls, suggests travel routes, and provides medication reminders. It can also control household appliances when integrated into Xiaomi’s line of smart home devices.

Xiaomi is the world's leading consumer IoT platform company and quietly the world’s third-largest smartphone manufacturer. The company connects 654 million smart devices and commands an impressive 14% global smartphone market share. Of course, its smartphone rivals in China have also been busy in the AI arena. Huawei, Oppo, and Vivo recently announced major upgrade plans for their own digital assistants.

The recently launched Google Pixel 8 also focuses on AI, with Google touting its algorithms’ ability to pick out the best facial expressions from a batch of group photos and combine them into a single image. That allows, for example, a photo of eight people to show everyone smiling even if only four were smiling when the shutter snapped. Magic Compose, another feature Google announced at its May 2023 developer conference, uses generative AI to suggest replies to text messages or rewrite them in a different tone.

According to a recent Bloomberg report, Apple is developing a number of new AI features for the iPhone and other iOS devices. iOS 18, scheduled for release in 2024, is expected to include AI upgrades to Siri, Apple’s messaging app, Apple Music, Pages, Keynote, and more.

"There may be a killer use case that doesn't exist yet," said Luke Pearce, a senior analyst for CCS Insight, a tech research and advisory firm. "But that will come around the corner, surprise us all, and become completely indispensable.”

In the meantime, generative AI promises to be the smartphone industry’s next major milestone. As the technology continues to evolve, expect device makers to find more ways to incorporate it while taking advantage of the latest edge AI semiconductors.

New Shortage Coming for AI? - November 3, 2023

Artificial intelligence (AI) is arguably today’s most exciting technology. However, as with any new tech, adoption and development aren’t easy. Chipmakers and buyers alike are currently struggling with a shortage of AI components due to bottlenecks in advanced chip production lines at TSMC and SK Hynix. These bottlenecks are expected to ease in 2024 thanks to aggressive capacity upgrades, as AI represents a big opportunity for chip companies.

Meanwhile, Nvidia dominates the AI market thanks to its combined hardware and software offerings. Companies like AMD are attempting to challenge Nvidia’s dominance by exploring new software solutions, and acquiring startups that have done the same, to pair with their existing hardware.

Production Bottlenecks Affecting the Chip Industry Amid AI Boom

The number of artificial intelligence (AI) applications has skyrocketed as generative AI takes the world by storm. Businesses of all shapes and sizes are exploring ways to integrate the technology into their operations—and use it to boost their bottom lines. However, the advanced chips needed to power large language models (LLMs) and AI algorithms are complex and difficult to produce.

Take Nvidia’s flagship H100 AI accelerator, for instance. Though orders for the chip are piling up, production is limited by the fact that TSMC is the only firm manufacturing the H100. As a result, the GPU’s availability hinges on TSMC’s tight production capacity, particularly for CoWoS packaging. While the Taiwanese chipmaker expects to start filling orders in the first half of 2024, this bottleneck represents a larger issue for the AI industry.

As demand for AI products soars, chipmakers are scrambling to keep up. High-powered GPUs aren’t the only components experiencing bottleneck issues either. The smaller components inside them are also difficult to source given their novelty and scarcity.

HBM3 chips, the fastest memory components needed to support the H100’s intense AI computations, are currently supplied exclusively by SK Hynix. The company is racing to increase its capacity, but doing so takes time. Meanwhile, Samsung hopes to secure memory chip orders from Nvidia by next year as it rolls out its own HBM3 offerings.

All told, data from DigiTimes points to a massive gap between supply and demand in the AI server market. Analyst Jim Hsiao estimates the shortfall is as wide as 35%. Even so, more than 172,000 high-end AI servers are expected to ship this year.

These shipments are supported by chipmakers that have made (and continue to make) significant increases in their production capacity. By mid-2024, TSMC’s CoWoS capacity is expected to reach around 30,000 wafers per month, up from the 20,000 wafers per month it had projected for the new year as recently as this summer.

Demand for AI servers is led by massive buying initiatives from tech firms as they seek to revamp their computing operations with AI. Indeed, 80% of AI server shipments are sent to just five buyers—Microsoft, Google, Meta, Amazon (AWS), and Supermicro. Of these, Microsoft leads the way with a staggering 41.6% of the total market share for high-end AI servers.

Analysts believe this head start will make it very difficult to unseat Microsoft from its position of AI leadership in the coming years. A major technological breakthrough or significant investment will be needed for the likes of Google and Meta to catch up, given their respective 13.5% and 10.3% market shares.

Notably, the growing demand for AI servers is also causing a drop in shipments of traditional high-end servers. Top tech firms are moving away from these general-purpose machines as their budgets are pulled in multiple directions. Moreover, companies are choosing to buy directly from ODM suppliers rather than from server brands like Dell and HP. Experts predict ODM-direct purchases will account for 81% of total server shipments this year. This shift has forced brand-name server makers to adapt quickly as their business model is pulled out from under them.

Competition in the AI space is expected to heat up in 2024 and beyond thanks to capacity increases and profitable applications for AI continuing to be discovered. As the necessary hardware becomes more readily available, buyers will have more options and flexibility in their orders, leading to more competition among manufacturers. Though AI demand is likely to even out over time, this sector represents a massive opportunity for chipmakers over the coming decade. Expanding advanced chip production capacity is step one toward reliably fueling the AI transformation.

AMD Acquires Nod.AI to Bolster its AI Software Portfolio and Compete with Nvidia

In the race for dominance in the artificial intelligence (AI) sector, all roads run through Nvidia. The GPU maker saw its revenue more than double this year on the back of significant demand for AI products and the decade of foresight that positioned it as a market leader. While Nvidia’s hardware is certainly impressive, its full-fledged ecosystem of AI software and developer support is what truly gives the firm such a massive advantage.

In an effort to catch up, AMD has announced its acquisition of Nod.AI. The startup is known for creating open-source AI software that it sells to data center operators and tech firms. Nod.AI’s technology helps companies more easily deploy AI applications tuned for AMD’s hardware. While the terms of the acquisition weren’t disclosed, Nod.AI has raised nearly $37 million to date.

In a statement, an AMD spokesperson said, “Nod.AI’s team of industry experts is known for their substantial contributions to open-source AI software and deep expertise in AI model optimizations.”

Nod.AI was founded in 2013 by Anush Elangovan, a former Google and Cisco engineer. He was joined by several noteworthy names from the tech industry, including Kitty Hawk’s Harsh Menon. The startup launched as an AI hardware company focusing on gesture recognition and hand-tracking wearables for gaming. However, it later pivoted to focus on AI deployment software, putting it closely in line with AMD’s needs.

The startup will be absorbed into an AI group that AMD formed earlier this year. Currently, the group consists of more than 1,500 engineers and focuses primarily on software. AMD already plans to expand this team with an additional 300 hires in 2023 and more in the coming years. It’s unclear whether these figures include the employees coming in from Nod.AI or if AMD plans to hire even more staff in addition to them.

Nod.AI’s technology primarily focuses on reinforcement learning, an approach that uses trial and error to train and refine AI systems. For AMD, the acquisition adds another software offering with which to court prospective buyers. Reinforcement learning tools help customers deploy new AI solutions and improve them over time. With software designed to work well with AMD’s hardware, the process becomes more intuitive and leads to faster launch times.
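Reinforcement learning’s trial-and-error loop can be illustrated with its simplest form, a multi-armed bandit. The sketch below is a generic epsilon-greedy agent, not Nod.AI’s software: the three actions and their payoff probabilities are hypothetical. The agent usually picks the action it currently rates best (exploitation) but occasionally tries a random one (exploration), updating a running average of each action’s reward as results come in.

```python
import random


def epsilon_greedy(true_probs, steps=2000, epsilon=0.1, seed=0):
    """Learn which action pays off best purely by trial and error.

    true_probs are hypothetical success probabilities the agent cannot see;
    it only observes a reward of 1.0 (success) or 0.0 (failure) per try.
    """
    rng = random.Random(seed)  # fixed seed keeps the run reproducible
    n = len(true_probs)
    counts = [0] * n      # how many times each action has been tried
    values = [0.0] * n    # running estimate of each action's average reward

    for t in range(steps):
        if t < n:
            action = t                      # try every action once first
        elif rng.random() < epsilon:
            action = rng.randrange(n)       # explore: pick a random action
        else:
            action = max(range(n), key=lambda a: values[a])  # exploit best guess
        reward = 1.0 if rng.random() < true_probs[action] else 0.0
        counts[action] += 1
        # Incremental mean: nudge the estimate toward the latest reward.
        values[action] += (reward - values[action]) / counts[action]
    return values, counts


values, counts = epsilon_greedy([0.2, 0.5, 0.8])
best = max(range(len(values)), key=lambda a: values[a])
print("estimated values:", [round(v, 2) for v in values])
print("best action:", best, "tried", counts[best], "times")
```

Raising `epsilon` spends more steps exploring, while lowering it commits to the current favorite sooner; real reinforcement learning systems extend the same idea to sequences of decisions and much larger action spaces.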

While AMD is a long way from being able to compete with Nvidia’s software platform and developer support directly, it is taking steps in the right direction. The company is utilizing both internal investment and external acquisitions to do so, according to its president Victor Peng. Nod.AI is its second major acquisition in the AI software space in the past few months. Though AMD has no current plans for further moves, Peng noted that the firm is “always looking.”

Over the coming years, Nvidia’s lead in the AI space will be challenged. For AMD and others, combined innovation in both hardware and software will be essential to winning market share from the current leader. AMD’s latest acquisition should bolster its software portfolio in the near term while the company continues to scale its long-term plans.

Leaders Pledge AI Protection as Satellites Make Chips in Space - October 20, 2023

The chip industry is already worth $500 billion, and that figure is expected to double by the end of the decade. With so much on the line, chipmakers and tech companies are doing everything they can to innovate and evolve.

For some, this means pursuing the mind-boggling power of artificial intelligence. With many still wary about the technology, though, government officials and tech leaders are teaming up to safely advance the AI field and earn trust. Others are looking elsewhere for innovation—including the stars. Could manufacturing semiconductor materials in space be the key to more efficient chips in the years to come? One U.K. startup thinks the answer is yes.

Tech Leaders Commit to Safely Advancing AI Through Collaboration and Transparency

It’s no secret artificial intelligence (AI) is reshaping the way the world interacts with technology. Thanks to the introduction of ChatGPT, machine learning and generative AI have gone mainstream with everyone from leading tech firms to average end users experimenting with its possibilities. But some are less enthusiastic about the potential applications for AI and remain wary of its risks.

As the ubiquity of AI extends to practically every industry, tech leaders and government officials are coming together to pledge their commitment to AI safety. Spearheaded by the Biden Administration, the voluntary call-to-action has been answered by 15 influential tech firms in recent months.

The program was initially backed earlier this year by seven AI companies: Google, Microsoft, OpenAI, Meta, Amazon, Anthropic, and Inflection. Now, eight more firms have pledged to aid in the safe development of AI: Adobe, IBM, Nvidia, Palantir, Salesforce, Cohere, Stability AI, and Scale AI.

Scale AI said in a recent blog post, “The reality is that progress in frontier model capabilities must happen alongside progress in model evaluation and safety. This is not only the right thing to do, but practical.”

“America’s continued technological leadership hinges on our ability to build and embrace the most cutting-edge AI across all sectors of our economy and government,” Scale added.

Indeed, companies around the world are exploring new ways to integrate artificial intelligence into every aspect of their operations. From logistics to customer service and research to development, AI promises to revamp the way work is done.

Even so, the safety concerns around AI loom large over any potential benefits. Much of this fear stems from a limited understanding of how the models work as they grow larger and more complex. Others worry it will soon be impossible to distinguish AI-generated content from human-generated work. Meanwhile, the cybersecurity risks associated with AI are numerous, while solutions remain largely unexplored.

The Biden Administration’s plan to address these problems is threefold. Step one emphasizes building AI systems that put security first, including investment in cybersecurity research and safeguards to prevent unwanted access to proprietary models. Step two focuses on safety testing: AI firms involved in the pledge have committed to rigorous internal and external testing to ensure their products are safe before introducing them to the public. Given the rapid advancement of AI and the industry-wide push to bring new applications to market first, this is an important guardrail.

Finally, the companies involved have made a commitment to earning the public’s trust. They aim to accomplish this in many ways, but ensuring users know when content is AI-generated is paramount. Other transparency guidelines include disclosing the capabilities of AI systems and leading research into how AI systems can affect society.

In a statement, the White House said, “These commitments represent an important bridge to government action, and are just one part of the Biden-Harris Administration’s comprehensive approach to seizing the promise and managing the risks of AI.”

The statement also mentions an executive order and bipartisan legislation currently being developed. Ultimately, though, no one company or government will dictate the future of AI. An ongoing collaboration is needed to ensure the technology is developed safely over the coming years.

Getting the most influential tech firms on board early is a big step in the right direction. Closely monitoring their actions to ensure they consistently align with this commitment is crucial as more innovations are made and AI continues to evolve.

U.K. Startup Eyes Satellite-Based Chip Manufacturing in Space, Promises Greater Efficiency

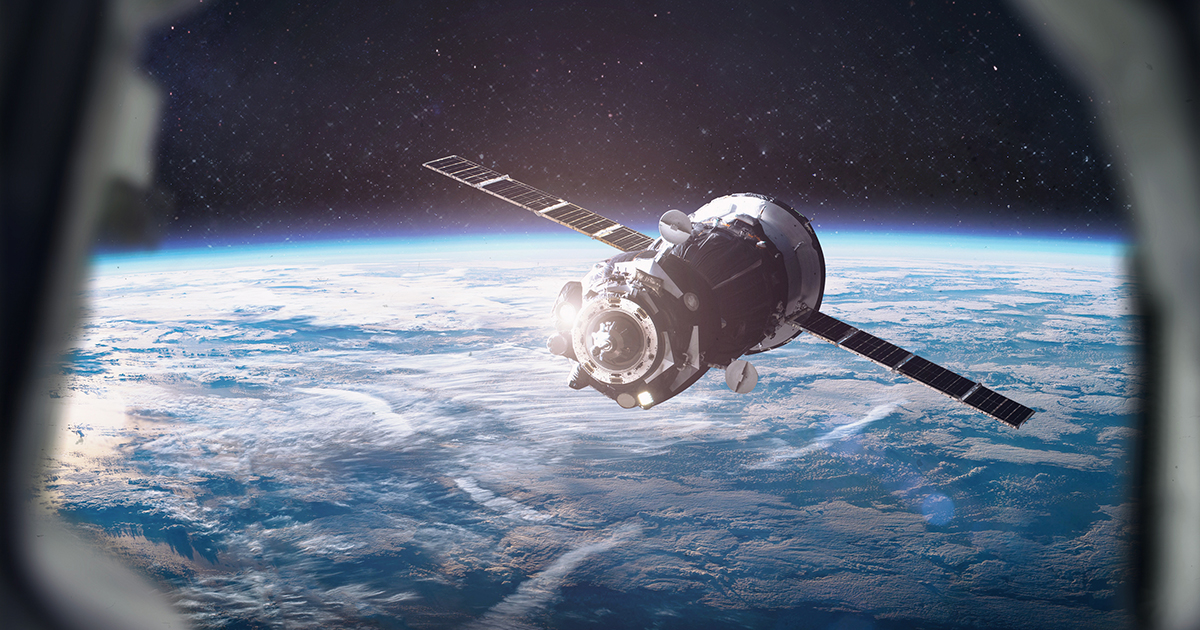

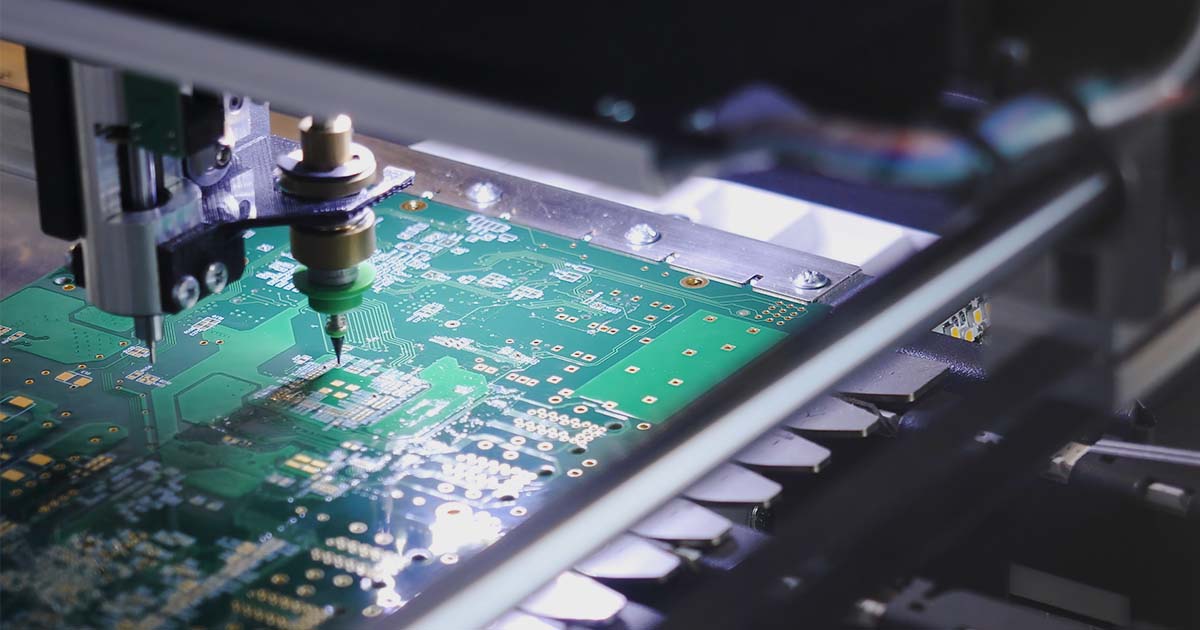

Producing high-quality semiconductors requires a precise manufacturing environment that costs billions of dollars to create. While the microgravity and vacuum of outer space are hostile to human life, they are perfect for making semiconductors. Some companies, including U.K.-based startup Space Forge, are exploring the possibility of manufacturing chips in orbit.

The startup’s ForgeStar-1 satellite is on its way to the U.S. and will be launched either late this year or early next year. This comes after its first attempt at a launch went awry when the Virgin Orbit rocket it was strapped to failed in January.

The satellite is roughly the size of a microwave but contains a powerful automated chemistry lab. Researchers on Earth will control the devices inside to mix chemical compounds and experiment with novel semiconductor alloys. They’ll be able to monitor how the substances behave in microgravity and the vacuum of space compared to their responses on Earth.

In a statement to Space.com, Space Forge CEO Josh Western said, “Producing compound semiconductors is a very intense and very slow process, they are literally grown by atoms, and so gravity has a profound effect… In space you’re able to overcome that barrier, because there is an absence of gravity.”

Of course, microgravity isn’t the only advantage of making chips in space. The process also benefits from the near-perfect vacuum of orbit, which chipmakers rely on expensive machinery to replicate on Earth to protect materials from contamination. Manufacturing chip materials in space removes the need for manmade vacuum equipment and virtually eliminates contaminants.

Space Forge estimates that the favorable conditions of outer space make it possible to produce chips that are 10 to 100 times more efficient than those made on Earth. That’s a notable improvement that could have radical implications for the $500 billion semiconductor industry—especially considering that its size is expected to double by 2030.

Of course, making semiconductors in space isn’t as simple as it sounds. Materials produced in orbit would need to be safely returned to Earth for further processing and packaging. Protecting such sensitive materials on a rough and fiery trip back into the atmosphere is a major challenge.

Space Forge’s first satellite won’t even attempt this feat. Instead, it will beam experiment data back to Earth digitally for researchers to analyze. The startup does plan to return its satellites eventually, but Western says this isn’t in the forecast for another two to three years.

Although the prospect of making chips in space remains futuristic, this is an exciting development to monitor. Manufacturing semiconductors in space’s favorable environment could yield far more efficient chips in the years to come. As chipmakers seek new ways to produce the most advanced silicon and cash in on the growing industry’s demand, no approach is too outlandish to consider.

Moving Forward with Semiconductor Development – October 3, 2023

As tensions with China persist, chipmakers are looking for new ways to diversify their supply chains. This has put developing Asian nations in the spotlight. Perhaps none has shone as brightly as Vietnam, which the U.S. views as a key strategic partner. A major tech summit last month saw several billion-dollar business partnerships with U.S. firms inked.

Meanwhile, U.S. domestic chip ambitions remain strong. Private companies and public institutes alike are working to accelerate American chip innovation while also bolstering a workforce facing massive shortages. A new $45.6 million investment from the National Science Foundation aims to address both goals as the U.S. continues expanding its stake in the chip sector.

Vietnam, U.S. Strengthen Ties Amid New Semiconductor Deals

Political and economic relations between the U.S. and Vietnam have been icy over the past few decades. However, this sentiment is changing thanks to hard work from government officials on both sides. The two countries have now agreed to billions of dollars in business partnerships following a major tech summit and diplomatic visit by U.S. President Joe Biden.

As countries and companies alike seek to diversify their supply chains away from China, developing nations in Asia have taken center stage. Thanks to cheap labor and regional accessibility to other chip operations, these countries offer the most convenient path to a more stable supply chain. Vietnam, which now sees the U.S. as its largest export market, has seized the opportunity to expand its role in the chip sector.

In a press conference, President Biden said, “We’re deepening our cooperation on critical and emerging technologies, particularly around building a more resilient semiconductor supply chain.”

“We’re expanding our economic partnership, spurring even greater trade and investment between our nations,” he added.

A number of key government officials joined executives from top tech and chip firms, including Google, Intel, Amkor, Marvell, and Boeing, at the Vietnam-U.S. Innovation and Investment Summit last month. The roundtable consisted of discussions on how to deepen partnerships and spark new investments.

Those talks have already paid dividends, with several new business deals announced. Leading the way was a $7.8 billion pledge from Vietnam Airlines to purchase 50 new 737 Max jets from Boeing.

In the chip sector, two new semiconductor design facilities are being built in Ho Chi Minh City. One will belong to Synopsys and the other to Marvell—both U.S.-based firms. At a broader level, the partnership seeks to “support resilient semiconductor supply chains for U.S. industry, consumers, and workers.”

These deals come shortly after Amkor announced a $1.6 billion chip facility near Vietnam’s capital, Hanoi. The company expects the new facility, which will primarily be used for assembly and packaging, to open sometime this month.

Despite this positive momentum, a dark cloud still hangs over the partnership. Vietnam’s chip workforce remains concerningly small. Currently, just 5,000 to 6,000 of the country’s roughly 100 million citizens are trained hardware engineers. Demand forecasts for the next five years project Vietnam will need 20,000 hardware engineers to fill an influx of new chip jobs. That number will more than double to 50,000 in ten years.

Without a robust pipeline of new chip talent, even the loftiest partnerships and investments could be in jeopardy. This reflects a similar chip worker shortage in the U.S., where 67,000 positions for technicians and engineers are expected to go unfilled by the end of the decade.

Even so, the increase in collaboration between Vietnam and the U.S. is a positive sign. Chipmakers are working to quickly decouple their supply chains from China as economic relations between the country and its international trade partners sour. Hubs like Vietnam, India, and Malaysia have become central to these efforts. With each bit of political momentum, relocating chip production becomes easier.

The U.S.-Vietnam partnership is an important one to monitor in the coming months. Both Washington and Hanoi believe their new strategic partnership will usher in even more investment deals than those already revealed. Whether or not the latest wave of diplomacy is enough to entice investors and other chipmakers remains to be seen.

National Science Foundation Commits $45.6M to Support US Chip Industry

In a new wave of support arriving as a result of the CHIPS Act, the U.S. National Science Foundation (NSF) has pledged $45.6 million to support the domestic semiconductor industry. The public-private partnership brings in several top firms, including IBM, Ericsson, Intel, and Samsung. Notably, the support comes through the NSF’s Future of Semiconductors (FuSe) program.

NSF Director Sethuraman Panchanathan said in a statement following the investment, “By supporting novel, transdisciplinary research, we will enable breakthroughs in semiconductors and microelectronics and address the national need for a reliable, secure supply of innovative semiconductor technologies, systems, and professionals.”

The program funds are divided across 24 unique research and education projects with more than 60 awards going to 47 academic institutions. The grants are funded primarily by the NSF using dollars from the CHIPS Act with additional contributions coming from the partner companies. Each firm has pledged to provide annual support for the program through the NSF.

NSF leaders hope the investment will serve as a catalyst for chip breakthroughs. The FuSe program emphasizes a “co-design” approach to chip innovation, which considers the “performance, manufacturability, recyclability, and environmental sustainability” of materials and production methods.

Perhaps more important to U.S. semiconductor ambitions, though, is developing a robust chip workforce. Currently, the nation is lacking in this area with 67,000 chip jobs expected to be vacant by 2030. The NSF’s latest investment places a heavy emphasis on developing chip talent in America. As the industry works to shore up workforce gaps, collaboration is more essential than ever.

Panchanathan says the NSF’s $46 million investment will “help train the next generation of talent necessary to fill key openings in the semiconductor industry and grow our economy from the middle out and bottom up.”

Leveraging the expertise and support of industry partners is a key component of the FuSe program. Dr. Richard Uhlig, senior fellow and director of Intel Labs, said in a joint press release, “Implementing these types of programs across the country is an incredibly powerful way to diversify the future workforce and fill the existing skills gap.”

Meanwhile, President of Samsung Semiconductor in the U.S., Jinman Han, said, “Helping drive American innovation and generating job opportunities are critical to the semiconductor industry… As we grow our manufacturing presence here [in the U.S.], we look for partners like NSF that can help address the challenges at hand and drive progress in innovation while cultivating the semiconductor talent pipeline.”

Demand for semiconductors is growing around the world. As chipmakers seek to move their operations out of China amid tense economic conditions, the U.S. domestic industry is vying to reclaim its status as a global powerhouse. The CHIPS Act, passed in 2022, has sparked a new period of growth and innovation as demonstrated by initiatives like this one.

As the latest wave of NSF-funded projects rolls out over the coming months, it will be exciting to see what innovations they yield. Likewise, these essential programs will help inspire the next generation of chip talent in America.

Corporate Demand for AI is Driving Chip Market - September 19, 2023

The chip market has endured a tumultuous few years in the wake of the COVID-19 pandemic. Luckily, several factors within the tech industry are paving the way for a strong recovery and pattern of growth. From IoT devices to automakers eyeing electric vehicles, products across every sector need more chips. As the AI craze reaches full swing, chipmakers are turning record profits and preparing to use this as an opportunity for bolstered growth in the coming years.

AI, Increasing Tech Adoption Drive Chip Demand, Grow Market

The semiconductor industry appears well-positioned for a period of growth, according to an IMARC Group report that looks as far ahead as 2028. IMARC predicts the global chip industry will reach $941.2 billion by 2028, growing at a compound annual growth rate (CAGR) of 7.5% between now and then.
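To see what a 7.5% CAGR implies, the forecast can be run backward: a value that compounds at rate r for n years grows by a factor of (1 + r)^n, so dividing the end value by that factor recovers the starting point. This is a minimal sketch under an assumption of ours, not IMARC’s stated method: “now” is taken to mean a 2023 base year, so 2028 is five compounding years away. The function name `implied_base` is hypothetical.

```python
def implied_base(future_value: float, cagr: float, years: int) -> float:
    """Return the starting value that grows to future_value at a constant CAGR."""
    return future_value / (1 + cagr) ** years


# Assumption: 2023 base year, so 2028 is five compounding years out.
base_2023 = implied_base(941.2, 0.075, 5)
print(f"Implied 2023 market size: ${base_2023:.1f} billion")
```

Under that assumption, the implied starting market is roughly $655.6 billion; choosing a different base year would shift the figure.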

So, what’s driving this steady incline for the industry? As the global economy leans more heavily on tech-centered products, the need for semiconductors rises. Given a push for more technology in offerings across sectors, chips are increasingly in demand—even in places they haven’t been traditionally.

The growing Internet of Things (IoT) is one major driver of growth. More and more of today’s devices are connected to the internet, enabling efficient communication and seamless collaboration. Of course, these devices require several components to stay connected, going beyond those required for their core function. Analysis firm Mordor Intelligence projects the IoT chip market to reach $37.9 billion by 2028, expanding significantly from its current $17.9 billion size.

Meanwhile, the automotive industry is also hungry for chips thanks primarily to the rise of electric vehicles (EVs). These cars rely on more components to power their complex software and hardware. Semiconductors are essential for key EV features like battery control, power management, and charging. Even non-electric vehicles are being built with more chips today than ever before. Consumers demand flashy, attractive features, which increases the number of chips needed to support them. As some companies lean into self-driving technology and advanced driver assistance systems, more advanced chips are required.

Indeed, the automotive industry has been one of the biggest chip buyers in recent years. Experts predict this trend will only increase as more carmakers and governments emphasize EVs, citing environmental concerns. Analysts project the auto sector will be the third-largest buyer of semiconductors by 2030, accounting for $147 billion in annual revenue.

Artificial intelligence (AI) is perhaps the most influential technology in the world right now. Surging demand has chipmakers fighting to keep up as companies race to invest in AI. The rise of ChatGPT sparked interest in generative AI, catching the eye of major tech players like Microsoft and Google. Meanwhile, other machine learning applications are being explored across every industry, driving demand for high-power data center chips. Benzinga projects the AI chip market will grow to $92.5 billion by 2028, a staggering increase from the $13.1 billion it was valued at in 2022.
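The market forecasts quoted in this section (Mordor Intelligence for IoT chips, Benzinga for AI chips) can be converted into implied annual growth rates with the standard CAGR formula, (end / start)^(1 / years) - 1. This is a minimal sketch: the Benzinga figures span 2022 to 2028 (six years), while the IoT figures are assumed here to span 2023 to 2028 (five years), since the text only describes $17.9 billion as the “current” size.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


ai_chip = cagr(13.1, 92.5, 6)   # Benzinga: $13.1B (2022) -> $92.5B (2028)
iot_chip = cagr(17.9, 37.9, 5)  # Mordor: assumed $17.9B (2023) -> $37.9B (2028)

print(f"AI chip market:  {ai_chip:.1%} per year")
print(f"IoT chip market: {iot_chip:.1%} per year")
```

Under these assumptions the AI chip forecast implies roughly 38.5% annual growth, more than double the roughly 16.2% implied for IoT chips, which helps explain why AI dominates chipmakers’ growth plans.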

Nvidia has a massive head start in the market and currently supplies the overwhelming majority of AI chips thanks to its prowess in the GPU market. Memory chipmakers are also benefiting as AI applications require high-speed DRAM. The explosive growth of AI is driving demand for HBM3 memory chips and their successors.

Finally, an increased desire for high performance across devices has put chipmakers in a favorable position. Emerging technologies such as 5G and edge AI computing require advanced silicon and additional components to enable connectivity.

As the world continues to embrace technology in every facet of daily life, the chip industry must be ready. Addressing critical workforce shortages and ensuring manufacturing capacity is sufficient are key areas to watch in the coming years. Moreover, creating a more diverse supply chain that is more resilient in the face of economic disruption is essential. By working to solve these challenges, the chip industry can put itself in a place to succeed throughout the remainder of the decade.

Corporate Demand for ChatGPT is an Excellent Opportunity for Chipmakers

ChatGPT, the flagship offering of OpenAI, has revolutionized the way the world thinks about artificial intelligence (AI). The technology has started evolving at a rapid pace—and the industry powering it isn’t far behind. As tech giants gear up for an AI arms race over the next decade, chipmakers are racing to meet demand.

Now, OpenAI is working to monetize its AI golden child. The Microsoft-backed startup reportedly brings in $80 million each month. But it isn't content to stop there. OpenAI recently introduced a new ChatGPT business offering, which gives corporate clients more privacy safeguards and additional features. The so-called ChatGPT Enterprise comes via a premium subscription for which the company has not yet disclosed pricing.

OpenAI reportedly worked with more than 20 companies to test the product and find the most marketable solutions. Zapier, Canva, and Estee Lauder were all involved and remain early customers of the product. Beyond these testers, OpenAI claims that over 80% of Fortune 500 companies have used its software since ChatGPT's launch late last year.

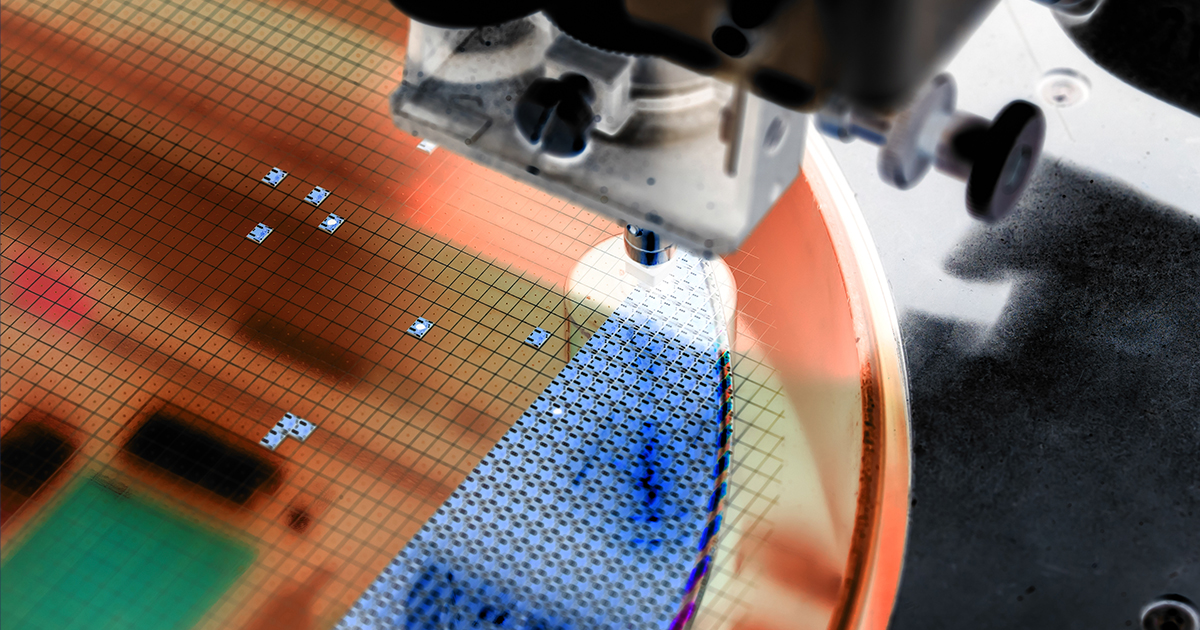

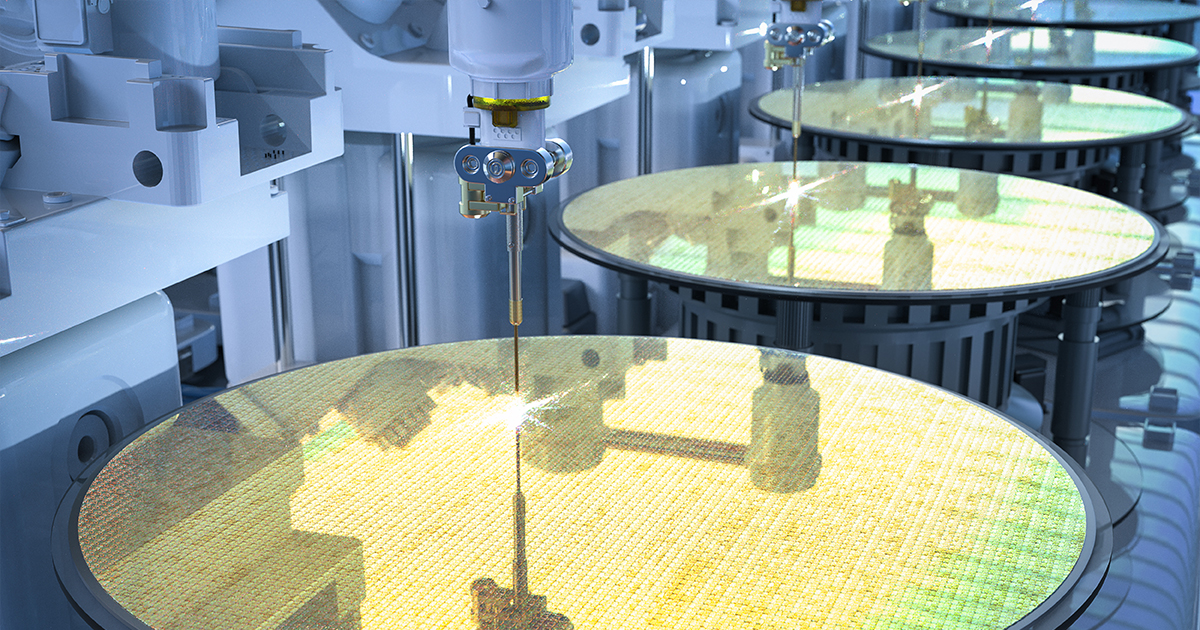

For the chip industry, AI represents a turning point. The technology is already being used to make chip manufacturing more efficient. Startups have tasked AI-powered computer vision programs with spotting defects in wafers during production. This method is both faster and more accurate than manually reviewing each wafer, resulting in greater revenue and production for chipmakers.

Elsewhere, researchers are beginning to explore how applying machine learning principles to the intricacies of chip design could one day result in more efficient, more powerful semiconductors than humans can create. Samsung is a pioneer in this area. The South Korean firm is already employing generative AI in hopes of competing with TSMC by increasing wafer yields.

Despite these promising applications, data from McKinsey shows just 30% of companies that currently use AI and machine learning see value from it. But this is expected to change quickly as more businesses experiment with AI and learn how to use it most effectively. The same report suggests the use of AI could generate $85 to $95 billion in the long term.

For chipmakers, using AI in-house isn't the only factor to consider. As the world's largest firms scramble to gain an advantage in the AI gold rush, their need for high-performance chips is acute. Without the right hardware, it's impossible to train AI models and use them to generate and analyze profitable data. Firms that can provide the needed silicon will benefit tremendously.

As ChatGPT is so often an indicator of the wider AI market's direction, don't be surprised to see a big push to bring AI into the office in the coming months. Startups of all sizes will introduce their offerings to businesses seeking to improve their efficiency and processes. Each day, more "real-world" uses for AI will crop up as startups that have hungrily devoured capital seek to start turning a profit. For chipmakers, who wins the AI race matters less than whether AI succeeds at all. The success or failure of AI as a concept and as a useful technology will dictate much of what the future looks like for chips. With some luck, it will be a key growth driver for the industry over the next ten years.

Semiconductors in Space! - September 5, 2023

The future is bright as technology continues to advance more rapidly than anyone can predict. AI leads the way as countries around the world strive to become proficient and find the next breakthroughs. Meanwhile, startups are looking to the stars as dreams of manufacturing higher-quality products in space inch closer to reality.

For the components industry, these developments are part of a larger trend of adaptation. As technology dictates how the world moves forward, the need for components is evolving but always present. Finding innovative ways to meet the demands of companies and countries pursuing advanced technology will be paramount in the years ahead.

U.K. Invests Millions in New AI Silicon but Eyes Chip Diplomacy as Path Forward

As the world turns its focus toward the exciting future of artificial intelligence (AI), every nation is racing to strengthen its digital capabilities. The U.K. recently announced an initiative that will see roughly $126 million poured into AI chips from AMD, Intel, and Nvidia. This move comes as part of a pledge made earlier this year to invest over $1.25 billion to bolster its domestic chip industry over the next 10 years.

However, critics of the move claim the government isn’t investing enough compared to other nations. Indeed, the U.K.’s latest investment is minuscule compared to those made elsewhere. The U.S. has invested $50 billion in its domestic semiconductor industry through the CHIPS Act while the E.U. has invested some $54 billion. Even so, the scope of the U.K.’s recent move shouldn’t be surprising, given that it accounts for just 0.5% of global semiconductor sales.

The recent injection of taxpayer money will be used to build a national AI resource. This will give AI researchers access to powerful computing resources and high-quality data to advance their work and the field. Other countries, including the U.S., are establishing similar programs to further their domestic AI capabilities.

The primary line item of the U.K.’s recent investment is reportedly an order of 5,000 GPUs from Nvidia, which are used to power generative AI data centers and are essential to running the complex calculations demanded by AI applications. The U.K. government is reportedly in advanced talks with Nvidia to secure these chips amid the company’s massive surge in international demand.

U.K. Prime Minister Rishi Sunak notes that Britain will focus on playing to its strengths rather than delving too far into areas where it is outmatched. For instance, the U.K. will devote a significant portion of its chip resources to research and design rather than building new fabs like many of its European neighbors.

Moreover, the U.K. seems poised to put itself at the center of the raging discussion surrounding AI safety. It recently announced that a long-awaited and highly publicized international AI safety summit will take place early this November. The meeting will include officials from "like-minded" governments as well as researchers and tech leaders. The group will convene at the historic Bletchley Park between Oxford and Cambridge, home of the National Museum of Computing and birthplace of Colossus, widely regarded as the first programmable electronic digital computer.

Interestingly, since the U.K.'s comparatively small investment will likely limit its domestic chip ambitions, leadership in the regulatory space could be a fitting role. Britain reportedly aims to be a bridge between the U.S. and China in tense chip and AI safety discussions.

In a statement, a government spokesperson said, “We are committed to supporting a thriving environment for compute in the U.K. which maintains our position as a global leader across science, innovation, and technology.”

Meanwhile, China is racing to buy billions of dollars of GPU chips to further its own AI ambitions ahead of U.S. bans slated to go into effect in early 2024. At this time, it’s unclear if the U.K. will invite China to participate in its upcoming summit.

This will be an important development to watch as the U.K. aims to secure its position as a chip leader despite investing far less than other nations. While the U.S., Japan, Taiwan, and South Korea continue to dominate manufacturing, Britain could play a vital role in the future of the industry as a global moderator and champion of regulatory discussions.

How Manufacturing Chips and Drugs in Space Could Revolutionize Life on Earth

Outer space presents an environment for unique experiments that are simply impossible to perform on Earth. Astronauts living aboard the International Space Station (ISS) have been conducting such research for years. More recently, though, interest in manufacturing products in outer space has taken off.

From new pharmaceuticals to pure materials for semiconductors, the possibilities are endless. As a result, experts believe the space manufacturing industry could top $10 billion as soon as 2030. Startups and governments alike are racing to push the limits of this sector.

Manufacturing certain products on Earth, especially at a microscopic scale, is limited by factors like gravity and the difficulty of producing a reliable vacuum. In space, high radiation levels, microgravity, and a near-perfect vacuum give researchers ample opportunity to produce materials or use research methods not available on Earth.

A Wales-based startup called Space Forge aims to revolutionize chip manufacturing by taking it into orbit. The company’s ForgeStar reusable satellite is designed to create advanced materials in space and return them safely to Earth.

Because crystals grown in microgravity can be of far higher quality than those grown on Earth, producing semiconductor materials in space could yield a final product with fewer imperfections. Andrew Parlock, Space Forge's managing director of U.S. operations, said in an interview, "This next generation of materials is going to allow us to create an efficiency that we've never seen before. We're talking about 10 to 100x improvement in semiconductor performance."

The startup plans to manufacture chip substrates using materials other than silicon. In theory, this could lead to chips that outperform anything the world has seen to date while also running more efficiently.

As for concerns about manufacturing at scale, Space Forge CEO Josh Western says, “Once we’ve created these crystals in space, we can bring them back down to the ground and we can effectively replicate that growth on Earth. So, we don’t need to go to space countless times to build up pretty good scale operating with our fab partners and customers on the ground.”

As the semiconductor industry seeks new ways to make chips more efficient with current manufacturing technology nearing its limits, new materials made in space could be the answer. Though many years of research and testing will be needed, space manufacturing is a promising path for chip companies to explore.

Meanwhile, manufacturing drugs in space has also caught the attention of investors and researchers alike. Varda Space Industries relies on microgravity's unique ability to help research and produce high-quality proteins through crystallization. This allows scientists to better understand a protein's crystal structure so they can optimize drugs to be more effective and resilient, with fewer side effects.

Varda co-founder and president Delian Asparouhov says, “You’re not going to see us making penicillin or ibuprofen… but there is a wide set of drugs that do billions and billions of dollars a year of revenue that actively fit within the manufacturing size that we can do.”

He notes that of all the millions of doses of the Pfizer COVID-19 vaccine given in 2021 and 2022, “the actual total amount of consumable primary pharmaceutical ingredient of the actual crystalline mRNA, it was effectively less than two milk gallon jugs.”

Once again, this alleviates concerns about producing drugs in space at scale. Rather than making the entire drug in space, companies like Varda will focus on making the most important components. Then, they'll ship those back to Earth to complete the manufacturing process.

Thanks to recent advances in spaceflight technology, such as reusable rockets that make missions to orbit cheaper, dreams of in-space manufacturing are inching closer to reality. Progress in the coming years will help lay the groundwork for what could become the new normal for manufacturing, one that allows humanity to go beyond the limits of what we can create on our planet.

AI is Running the Chip Industry – August 25, 2023

No technology currently has a greater influence on the semiconductor industry than artificial intelligence (AI). From generative models like ChatGPT to massive data centers powering in-house algorithms, AI has sent demand for high-end silicon through the roof.

With demand soaring, chipmakers are scrambling to keep up and expand their production capacities. Cutting-edge AI solutions demand high levels of performance and optimized power efficiency. So, churning out advanced chips is a top priority for manufacturers across segments. Some chipmakers are even turning to an unlikely source for answers to stringent design challenges for future chips—the AI algorithms themselves.

As the chip industry grapples with the possibilities and limitations of AI, the technology’s influence is already redefining the landscape.

AMD Assures Production Capacity for Key AI Chips Despite Tight Market

AMD CEO Lisa Su had reassuring words for analysts and investors during the company’s second-quarter earnings call. Amid a booming market for AI chips, Su admitted that production capacity is tight, but that her company is poised to meet demand following numerous discussions with supply chain partners in Asia earlier this year.

She said in the earnings report, “Our AI engagements increased by more than seven times in the quarter as multiple customers initiated or expanded programs supporting future deployments of Instinct accelerators at scale.”

Large language models (LLMs) like ChatGPT have brought AI, particularly generative AI, to the forefront of the public’s eye. Companies in every sector are scrambling to get their hands on the necessary tech to keep up. For AMD, the generative AI application market and data centers are key strategic focal points.

With many AMD customers reportedly interested in the MI300X, it's no surprise they hope to deploy the solution as soon as possible, even though AMD's MI300-series accelerators were announced just a month ago. In the meantime, AMD is working closely with its buyers to ensure that joint design work progresses and that deployments go smoothly as it begins sampling the new line.

Su noted in the Q2 earnings call that AMD has secured the production capacity needed to manufacture its MI300-series GPUs, spanning front-end wafer manufacturing through back-end packaging. The firm has committed to securing substantial capacity across its supply chain, including high-bandwidth memory (HBM) and TSMC's CoWoS advanced packaging. Over the next two years, AMD also plans to rapidly scale up its production capacity to meet soaring customer demand for AI hardware.

In the earnings report, Su said, “We made strong progress meeting key hardware and software milestones to address the growing customer pull for our data center AI solutions and are on track to launch and ramp production of MI300 accelerators in the fourth quarter.”